Regularization in Machine Learning: Meaning

Of course, the fancy definitions and complicated terminology are of little worth to a complete beginner. In general, regularization means to make things regular or acceptable.


Regularization is mainly used to fulfill two objectives: solving ill-posed problems and preventing overfitting.

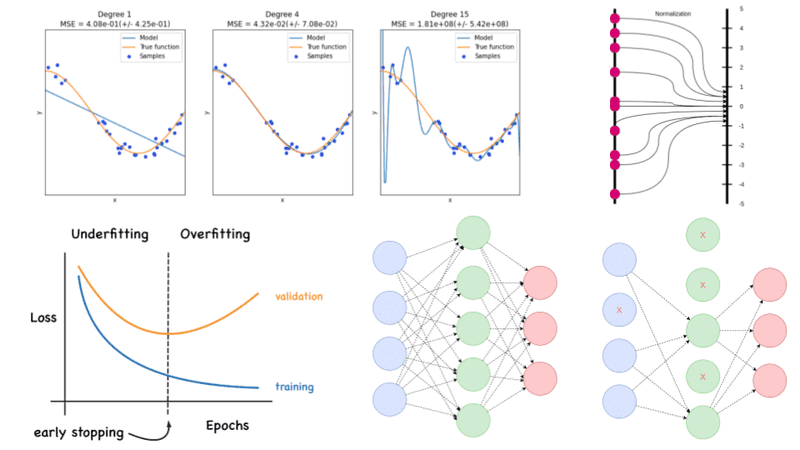

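In regression, regularization shrinks the coefficient estimates towards zero. The main regularization techniques in machine learning are L1 (Lasso) regularization, L2 (Ridge) regularization, and Elastic Net, which combines the two. Each of them helps prevent the model from overfitting by adding extra information, in the form of a penalty on the coefficients, to it.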
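To make "shrinks the coefficient estimates towards zero" concrete, here is a minimal sketch for the simplest possible case: one feature, no intercept, and an unscaled squared-error loss. The toy data and the helper name `ridge_1d` are assumptions for illustration only. Minimizing the sum of squared errors plus `lam * w**2` has the closed-form solution `w = sum(x*y) / (sum(x*x) + lam)`, so a larger `lam` makes the denominator grow and the weight shrink.

```python
# Toy data, assumed for illustration: exactly y = 2x, no intercept.
xs = [1.0, 2.0, 3.0, 4.0]
ys = [2.0, 4.0, 6.0, 8.0]


def ridge_1d(xs, ys, lam):
    """Ridge weight for the model y ≈ w * x (no intercept).

    Minimizes sum((y - w*x)**2) + lam * w**2; setting the derivative
    to zero gives w = sum(x*y) / (sum(x*x) + lam).
    """
    if lam < 0:
        raise ValueError("lam must be non-negative")
    sxy = sum(x * y for x, y in zip(xs, ys))
    sxx = sum(x * x for x in xs)
    return sxy / (sxx + lam)


# Sweep the regularization strength and watch the weight shrink.
for lam in [0.0, 1.0, 10.0, 100.0]:
    print(f"lambda={lam:>6}: w={ridge_1d(xs, ys, lam):.4f}")
```

With `lam = 0` the sketch recovers the least-squares weight of 2, and with `lam = 10` the weight drops to 1.5. It keeps shrinking as `lam` grows but never becomes exactly zero, which is characteristic of the L2 penalty.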

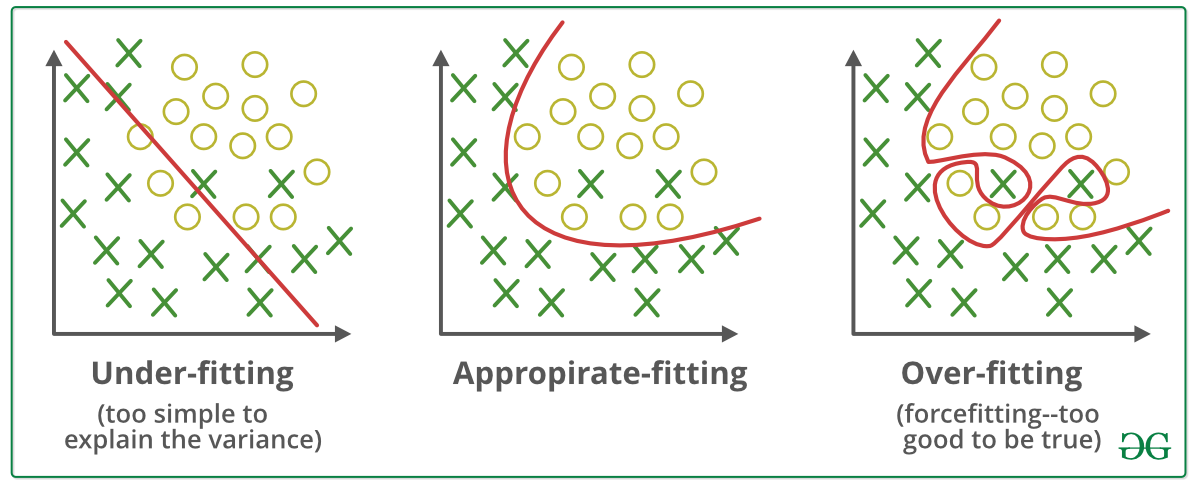

This penalty controls the model complexity: larger penalties mean simpler models. An overfitting model has low accuracy on unseen data, even if it scores well on the training data. Regularization techniques improve performance on new data by preventing overfitting in the designed model.

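Ridge regularization penalizes the weights with the (squared) L2 norm. The regularization parameter in machine learning is λ, and it has the following features: it is non-negative; λ = 0 removes the penalty and recovers the unregularized model; and larger values of λ shrink the coefficients more strongly. Without enough regularization, an overfit model is not able to predict the output accurately when it is given unseen data.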

If we know the set of irrelevant features, we can easily penalize the corresponding parameters and so avoid overfitting. Regularization is an important concept in machine learning, and it helps address the overfitting problem. While regularization is used with many different machine learning algorithms, including deep neural networks, in this article we use linear regression to explain it.

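Regularization is one of the techniques used to control overfitting in highly flexible models. If the data has n features, a linear regression model will correspondingly learn n + 1 parameters, i.e., one weight per feature plus a bias (intercept) term. Lasso regularization penalizes these weights with the L1 norm.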
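The parameter count and the L1 penalty can be sketched together. The hypothetical helper `lasso_cost` below computes the mean squared error of a linear model with n weights and one bias, then adds `lam` times the L1 norm of the weights. Leaving the bias out of the penalty is a common convention assumed here; the article does not specify it, and the data is made up.

```python
def predict(row, w, b):
    # Linear model: one weight per feature plus a bias term.
    return sum(wj * xj for wj, xj in zip(w, row)) + b


def lasso_cost(X, y, w, b, lam):
    """Mean squared error plus lam times the L1 norm of the weights.

    The bias b is left out of the penalty, a common convention
    (assumed here, not taken from the article).
    """
    m = len(y)
    mse = sum((predict(row, w, b) - yi) ** 2 for row, yi in zip(X, y)) / m
    return mse + lam * sum(abs(wj) for wj in w)


X = [[1.0, 2.0], [3.0, 4.0]]   # n = 2 features, hypothetical data
y = [5.0, 11.0]
w = [1.0, 2.0]                 # n weights ...
b = 0.0                        # ... plus one bias: n + 1 = 3 parameters
print("parameters:", len(w) + 1)
print("cost:", lasso_cost(X, y, w, b, lam=0.5))
```

Here the weights fit the two points exactly, so the whole cost (1.5) comes from the penalty term 0.5 × (|1| + |2|).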

Regularization helps to solve the problem of overfitting in machine learning. You can refer to this playlist on YouTube for any queries regarding the math behind the concepts in machine learning.

I have learnt regularization from different sources, and I feel that learning from several sources gives a better understanding. Regularization is used in machine learning as a solution to overfitting by reducing the variance of the ML model under consideration. In this article, titled The Best Guide to Regularization in Machine Learning, you will learn all you need to know about regularization.

It is one of the most important concepts of machine learning. In addition, there are cases where it is used to reduce the complexity of the model without decreasing its performance. Elastic Net regression is a combination of Ridge and Lasso regression.
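A minimal sketch of how Elastic Net combines the two penalties. The hypothetical helper `elastic_net_penalty` mixes the L1 norm and the squared L2 norm with a ratio `l1_ratio`. The weighting `lam * (l1_ratio * ||w||_1 + 0.5 * (1 - l1_ratio) * ||w||_2^2)` is the convention scikit-learn documents for its `ElasticNet` (`alpha`, `l1_ratio`); other libraries may scale the terms differently.

```python
def elastic_net_penalty(w, lam, l1_ratio):
    """Elastic Net penalty: a weighted mix of the L1 and squared L2 norms.

    Uses lam * (l1_ratio * ||w||_1 + 0.5 * (1 - l1_ratio) * ||w||_2^2);
    l1_ratio = 1 is a pure Lasso penalty and l1_ratio = 0 is a pure
    Ridge penalty (with a 0.5 factor).
    """
    if not 0.0 <= l1_ratio <= 1.0:
        raise ValueError("l1_ratio must lie in [0, 1]")
    l1 = sum(abs(wj) for wj in w)
    l2_sq = sum(wj * wj for wj in w)
    return lam * (l1_ratio * l1 + 0.5 * (1.0 - l1_ratio) * l2_sq)


w = [1.0, -2.0, 0.0]   # hypothetical weights
for ratio in (0.0, 0.5, 1.0):
    print(ratio, elastic_net_penalty(w, lam=1.0, l1_ratio=ratio))
```

Sliding `l1_ratio` between 0 and 1 moves the penalty continuously from Ridge-like to Lasso-like behaviour.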

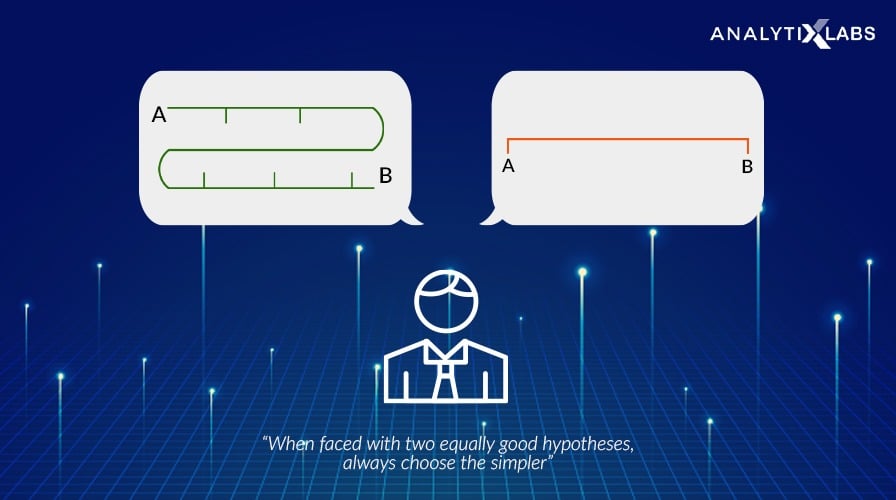

In simple words, regularization discourages learning a more complex or flexible model, to avoid the risk of overfitting. Sometimes one resource is not enough to get you a good understanding of a concept. To avoid overfitting, we use regularization in machine learning so that a model fitted on the training set also performs well on our test set.

Below, we will see in depth how regularization works and how each of these regularization techniques behaves. The strength of the regularization is set by a tuning parameter that controls how heavily the penalty term is weighted.

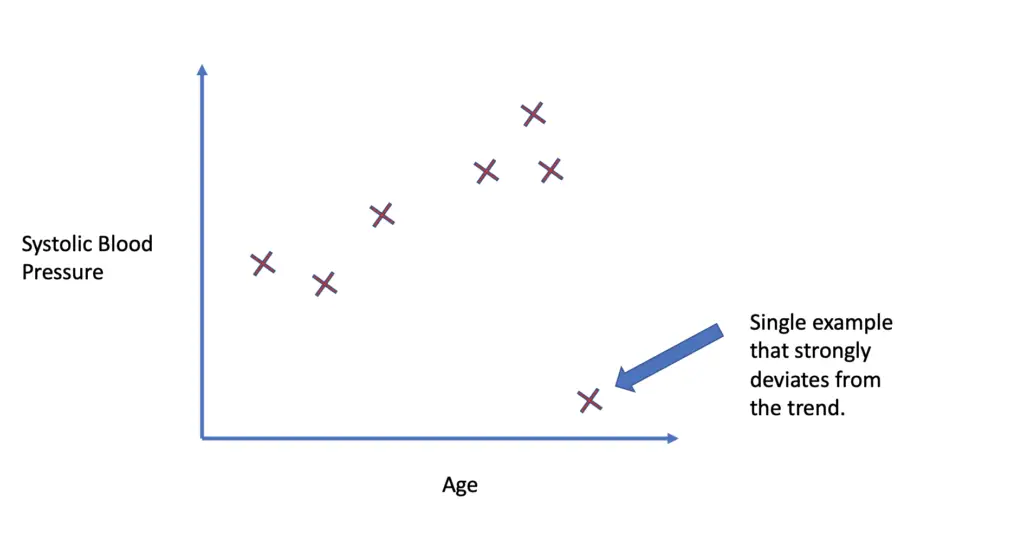

When someone wants to model a problem, let's say predicting an outcome from data, the model can end up fitting the training examples too closely. Suppose there are a total of n features present in the data. Overfitting happens because the model is trying too hard to capture the noise in the training dataset.

The cheat sheet below summarizes different regularization methods. As a result, the tuning parameter determines the impact on bias and variance in the regularization procedures discussed above. Regularization can be implemented in multiple ways: by modifying the loss function, the sampling method, or the training approach itself.

In the context of machine learning, regularization is the process that regularizes, or shrinks, the coefficients towards zero. In machine learning, regularization imposes an additional penalty on the cost function.
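One common way to write this penalized cost for linear regression, assuming mean squared error as the base loss and leaving the bias b unpenalized (both are conventions, not something the text fixes), with m training examples, n features, and regularization parameter λ:

$$
J(\mathbf{w}, b) = \frac{1}{m}\sum_{i=1}^{m}\bigl(y_i - \hat{y}_i\bigr)^2 + \lambda\,R(\mathbf{w}),
\qquad
\hat{y}_i = b + \sum_{j=1}^{n} w_j x_{ij}
$$

$$
R_{\text{ridge}}(\mathbf{w}) = \sum_{j=1}^{n} w_j^2,
\qquad
R_{\text{lasso}}(\mathbf{w}) = \sum_{j=1}^{n} \lvert w_j \rvert
$$

Setting λ = 0 recovers ordinary least squares; increasing λ trades a worse fit on the training data for smaller coefficients.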

Designing a simpler, smaller-sized model while maintaining the same performance rate is often important in practice. Sometimes the machine learning model performs well on the training data but does not perform well on the test data. L2 regularization corresponds to Ridge regression, a model tuning method used for analyzing data with multicollinearity.
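Why Ridge helps with multicollinearity can be seen with two nearly identical features. The sketch below solves the ridge normal equations `(X^T X + lam * I) w = X^T y` for two features (no intercept) with Cramer's rule; `lam = 0` is ordinary least squares. The helper name `ridge_two_features` and the data are hypothetical.

```python
import math


def ridge_two_features(x1, x2, y, lam):
    """Solve (X^T X + lam * I) w = X^T y for two features, no intercept.

    lam = 0 gives ordinary least squares. The 2x2 system is solved
    with Cramer's rule.
    """
    a = sum(u * u for u in x1) + lam
    c = sum(u * v for u, v in zip(x1, x2))
    d = sum(v * v for v in x2) + lam
    r1 = sum(u * t for u, t in zip(x1, y))
    r2 = sum(v * t for v, t in zip(x2, y))
    det = a * d - c * c
    if det == 0:
        raise ValueError("singular system; try lam > 0")
    return (d * r1 - c * r2) / det, (a * r2 - c * r1) / det


# Hypothetical data: x2 is almost a copy of x1 (strong multicollinearity).
x1 = [1.0, 2.0, 3.0, 4.0]
x2 = [1.01, 1.98, 3.02, 3.99]
y = [1.1, 1.9, 3.2, 3.8]

for lam in (0.0, 1.0):
    w = ridge_two_features(x1, x2, y, lam)
    print(f"lambda={lam}: w={w[0]:.3f}, {w[1]:.3f}, norm={math.hypot(*w):.3f}")
```

Because `X^T X` is almost singular, least squares returns large weights of opposite sign (roughly −7.9 and 8.9) that largely cancel each other. Adding `lam = 1` to the diagonal stabilizes the system and splits the effect evenly between the two features (about 0.48 and 0.49).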

Poor performance can occur due to either overfitting or underfitting the data. Regularization targets overfitting, and it can also help solve an ill-posed problem, meaning a problem without a unique and stable solution.

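The major concern while training a neural network or any machine learning model is to avoid overfitting. How well a model fits the training data largely determines how well it performs on unseen data: fitting too loosely leads to underfitting, and fitting too closely, noise included, leads to overfitting. By noise we mean the data points that don't really represent the true properties of the data.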

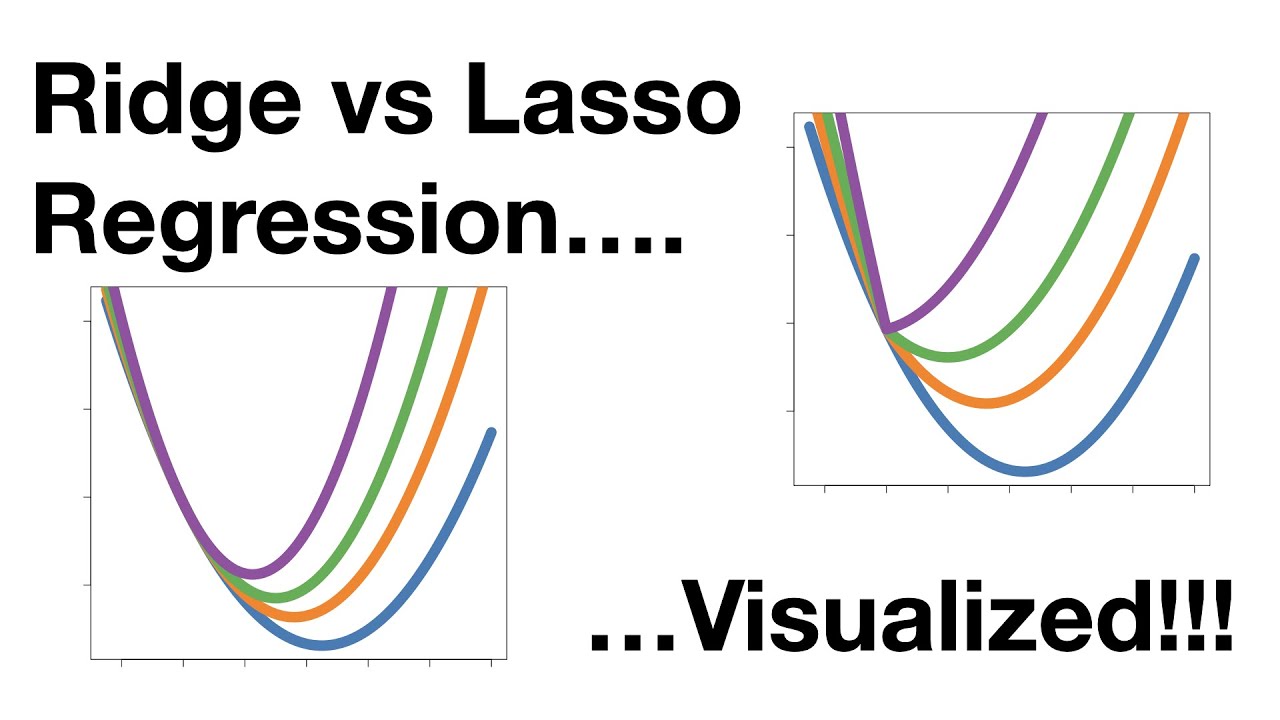

The regularized cost function can be minimized with gradient descent (for Lasso, with a subgradient, since |w| is not differentiable at zero). In Lasso regression, the model is penalized by the sum of the absolute values of the weights, whereas in Ridge regression the model is penalized by the sum of the squared values of the weights (coefficients).
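A minimal sketch of gradient descent on a Ridge-regularized cost, assuming one feature, no intercept, and the cost `J(w) = (1/m) * sum((w*x - y)**2) + lam * w**2`. The penalty simply adds `2 * lam * w` to the usual gradient, pulling the weight towards zero at every step. The data, learning rate, and step count are illustrative choices; the result is checked against the closed-form minimizer of the same cost.

```python
# Toy data (assumed): exactly y = 2x, no intercept, m = 4 examples.
xs = [1.0, 2.0, 3.0, 4.0]
ys = [2.0, 4.0, 6.0, 8.0]


def ridge_gradient(w, lam):
    """Gradient of J(w) = (1/m) * sum((w*x - y)**2) + lam * w**2."""
    m = len(xs)
    data_grad = (2.0 / m) * sum((w * x - y) * x for x, y in zip(xs, ys))
    return data_grad + 2.0 * lam * w  # the penalty adds 2*lam*w


def gradient_descent(lam, lr=0.02, steps=500):
    w = 0.0
    for _ in range(steps):
        w -= lr * ridge_gradient(w, lam)
    return w


lam = 2.5
w_gd = gradient_descent(lam)
# Closed-form minimizer of the same J: w = sum(x*y) / (sum(x*x) + m*lam)
w_exact = sum(x * y for x, y in zip(xs, ys)) / (sum(x * x for x in xs) + len(xs) * lam)
print(w_gd, w_exact)
```

Both values come out at 1.5, below the unregularized weight of 2. The learning rate must stay small enough for the iteration to converge; 0.02 is comfortably within range for this data.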

The concept of regularization is widely used even outside the machine learning domain.

Overfitting is a phenomenon where the model fits every point in the training dataset, noise included. As the value of the tuning parameter increases, the values of the coefficients decrease, lowering the variance. Instead of beating ourselves up over overfitting, we can attempt to reduce it with regularization.
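The claim that a larger tuning parameter lowers the variance can be checked with a small simulation. This sketch repeatedly draws noisy datasets from an assumed true relation y = 2x, fits a one-feature ridge weight to each, and measures how much the fitted weight varies between datasets. The data-generating process, the noise level, and the helper names are all hypothetical.

```python
import random
import statistics


def ridge_1d(xs, ys, lam):
    # Closed-form ridge weight for y ≈ w * x (no intercept).
    return sum(x * y for x, y in zip(xs, ys)) / (sum(x * x for x in xs) + lam)


def weight_variance(lam, trials=200, noise=1.0, seed=0):
    """Variance of the fitted weight across many noisy datasets.

    Each dataset is a hypothetical y = 2x + Gaussian noise sample.
    """
    rng = random.Random(seed)
    xs = [1.0, 2.0, 3.0, 4.0]
    weights = []
    for _ in range(trials):
        ys = [2.0 * x + rng.gauss(0.0, noise) for x in xs]
        weights.append(ridge_1d(xs, ys, lam))
    return statistics.pvariance(weights)


for lam in (0.0, 10.0, 100.0):
    print(lam, round(weight_variance(lam), 5))
```

Using the same seed for every λ means each run sees identical noise, so the drop in variance is due to the penalty alone. The price is bias: the average fitted weight also moves away from the true value of 2.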

L2 regularization is also known as Ridge regression. Regularization in machine learning can greatly reduce a model's variance without significantly increasing its bias.

It is very important to understand regularization to train a good model. The tuning parameter λ controls the strength of the penalty term in linear regression: a larger λ imposes a higher penalty on coefficients with larger values. Regularization is a technique used in an attempt to solve the overfitting problem in statistical models. First of all, I want to clarify how this problem of overfitting arises.

One of the major aspects of training your machine learning model is avoiding overfitting. Regularization discourages us from learning a more complex or flexible model, to avoid the problem of overfitting. Cross-validation can be used to determine the regularization coefficient.
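Choosing the regularization coefficient by cross-validation can be sketched as follows. For each candidate λ, the hypothetical helper `cv_error` trains a one-feature ridge model on k − 1 folds, measures squared error on the held-out fold, and averages over all points. The λ with the lowest validation error is then chosen. The data, the grid, and the interleaved fold assignment (`i % k`) are illustrative assumptions.

```python
def ridge_1d(xs, ys, lam):
    # Closed-form ridge weight for y ≈ w * x (no intercept).
    return sum(x * y for x, y in zip(xs, ys)) / (sum(x * x for x in xs) + lam)


def cv_error(xs, ys, lam, k):
    """Mean squared validation error of ridge_1d under k-fold cross-validation."""
    n = len(xs)
    total = 0.0
    for fold in range(k):
        val = [i for i in range(n) if i % k == fold]     # every k-th point
        train = [i for i in range(n) if i % k != fold]
        w = ridge_1d([xs[i] for i in train], [ys[i] for i in train], lam)
        total += sum((w * xs[i] - ys[i]) ** 2 for i in val)
    return total / n


def choose_lambda(xs, ys, grid, k=4):
    # Pick the candidate with the lowest cross-validated error.
    return min(grid, key=lambda lam: cv_error(xs, ys, lam, k))


# Hypothetical noisy data roughly following y = 2x.
xs = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0, 7.0, 8.0]
ys = [2.3, 3.6, 6.4, 7.7, 10.5, 11.6, 14.2, 16.1]
grid = [0.0, 0.1, 1.0, 10.0, 100.0]
best = choose_lambda(xs, ys, grid)
print("chosen lambda:", best)
```

On data this simple, a very large λ is clearly rejected because it shrinks the weight far below the true slope. With more features and more noise, the selected value typically lands somewhere in the middle of the grid.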

Regularization techniques help reduce the chance of overfitting and help us get an optimal model. This is exactly why we use regularization in applied machine learning.

In general, regularization involves augmenting the input information to enforce generalization. L1 regularization is also known as Lasso regression.
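The practical difference between L1 and L2 shows up in the one-feature case. Minimizing `0.5 * sum((y - w*x)**2) + lam * abs(w)` gives a soft-thresholded solution: the weight shrinks linearly with `lam` and becomes exactly zero once `lam` reaches `|sum(x*y)|`. Ridge, by contrast, only approaches zero. The helper name `lasso_1d`, the 0.5 scaling, and the toy data are assumptions for this sketch.

```python
import math


def lasso_1d(xs, ys, lam):
    """Lasso weight for y ≈ w * x (no intercept).

    Minimizes 0.5 * sum((y - w*x)**2) + lam * abs(w). The solution
    soft-thresholds sum(x*y): once lam >= |sum(x*y)|, the weight is
    exactly zero.
    """
    sxy = sum(x * y for x, y in zip(xs, ys))
    sxx = sum(x * x for x in xs)
    shrunk = max(abs(sxy) - lam, 0.0)   # soft threshold
    return math.copysign(shrunk, sxy) / sxx


xs = [1.0, 2.0, 3.0, 4.0]   # toy data: exactly y = 2x
ys = [2.0, 4.0, 6.0, 8.0]
for lam in (0.0, 30.0, 60.0, 100.0):
    print(lam, lasso_1d(xs, ys, lam))
```

Here `sum(x*y)` is 60, so the weight goes 2.0, 1.0, 0.0, 0.0 across the grid. This ability to zero out weights is why Lasso can drop irrelevant features entirely.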

